Reliability


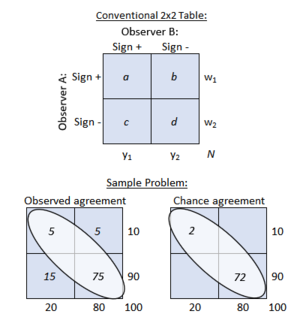


Revision as of 20:37, 26 May 2021

Interobserver reliability is the agreement between two observers on the findings of a given diagnostic test. A 2 × 2 table is used to evaluate reliability for tests with two possible categorical outcomes (yes/no, positive/negative, etc.). The table can be used to calculate a simple measure of agreement called the observed agreement ($p_o$).

| Observer A (rows) / Observer B (columns) | Yes | No |
|---|---|---|
| Yes | a | b |
| No | c | d |

However, the observed agreement does not take chance into account, and what we really want to know is the agreement beyond chance. A hypothetical illustration shows why chance matters. Suppose observers A and B would agree by chance in 60% of cases, which leaves 40% of cases in which agreement beyond chance is possible. If their overall observed agreement is 80%, subtracting the 60% due to chance leaves 20% agreement above chance. Of the 40% available above chance, the observers achieved only 20%, so their chance-corrected agreement is 20%/40%, i.e. 50%, much less than the observed 80%.
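The arithmetic of this illustration can be sketched in a few lines of Python. The function name `agreement_beyond_chance` is hypothetical; it takes the observed and chance agreement as proportions and divides the agreement achieved above chance by the agreement that was possible above chance.

```python
def agreement_beyond_chance(observed: float, chance: float) -> float:
    """Share of the agreement possible beyond chance that was actually achieved.

    Both arguments are proportions between 0 and 1 (hypothetical helper).
    """
    if chance >= 1.0:
        raise ValueError("chance agreement must be below 1")
    above_chance = observed - chance        # e.g. 0.80 - 0.60 = 0.20
    possible_above_chance = 1.0 - chance    # e.g. 1.00 - 0.60 = 0.40
    return above_chance / possible_above_chance


# Hypothetical illustration from the text: 80% observed, 60% expected by chance.
print(round(agreement_beyond_chance(0.80, 0.60), 2))  # 0.5
```

This ratio is the same one that Cohen's kappa formalizes.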

Cohen's kappa

Cohen's kappa (κ) coefficient is a commonly used statistic for taking chance into account. κ usually ranges from 0 to 1, but it can be negative (between −1 and 0) when there is marked disagreement, i.e. when the observers agree less often than chance alone would predict. The score indicates interobserver reliability after accounting for chance. Kappa values are often assigned qualitative descriptors to make them easier to interpret. Good tests have a kappa score of at least 0.6. Kappa has limitations, especially when the prevalence of the condition being tested is very high or very low, but it is still a very useful statistic.

| Description | Kappa value |
|---|---|
| Very good | 0.8 - 1.0 |
| Good | 0.6 - 0.8 |
| Moderate | 0.4 - 0.6 |
| Slight | 0.2 - 0.4 |
| Poor | 0.0 - 0.2 |
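A lookup from a kappa value to the descriptors in this table might be sketched as follows. The names `KAPPA_DESCRIPTORS` and `describe_kappa` are hypothetical. Because adjacent ranges in the table share their endpoints, the sketch assumes that a value on a boundary (for example 0.8) gets the higher descriptor, and it rejects negative values because the table does not cover them.

```python
# Rows of the descriptor table as (lower bound, description), highest first.
# Assumption: a value on a shared boundary (e.g. 0.8) gets the higher descriptor.
KAPPA_DESCRIPTORS = [
    (0.8, "Very good"),
    (0.6, "Good"),
    (0.4, "Moderate"),
    (0.2, "Slight"),
    (0.0, "Poor"),
]


def describe_kappa(kappa: float) -> str:
    """Map a kappa value in [0, 1] to its qualitative descriptor."""
    if not 0.0 <= kappa <= 1.0:
        # Negative kappa (agreement worse than chance) is not covered by the table.
        raise ValueError(f"kappa {kappa} is outside the 0-1 range covered by the table")
    for lower_bound, description in KAPPA_DESCRIPTORS:
        if kappa >= lower_bound:
            return description
    # Unreachable: every value in [0, 1] is at least 0.0.
    raise AssertionError("no descriptor matched")


print(describe_kappa(0.23))  # Slight
print(describe_kappa(0.6))   # Good
```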

Mathematics

If two observers independently examine the same $N$ patients, the results can be displayed in a 2 × 2 table, with $N = a + b + c + d$. Observer A finds a positive sign in $w_1$ patients and a negative sign in $w_2$ patients; observer B finds a positive sign in $y_1$ patients and a negative sign in $y_2$ patients. With observer A's findings in the rows of the table, $w_1 = a + b$, $w_2 = c + d$, $y_1 = a + c$ and $y_2 = b + d$. The two observers agree that the sign is positive in $a$ patients and negative in $d$ patients. The observed agreement ($p_o$) is calculated as:

- $p_o = \frac{a + d}{N}$
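As a small sketch, the observed agreement can be computed directly from the four cells of the table. The function name `observed_agreement` is hypothetical; `a` to `d` follow the table, and the example uses the counts of the sample problem later on this page, where the two disagreement cells (5 and 15) are inferred from that problem's chance-agreement arithmetic.

```python
def observed_agreement(a: int, b: int, c: int, d: int) -> float:
    """Observed agreement p_o = (a + d) / N for a 2 x 2 agreement table.

    a: both observers positive, d: both observers negative,
    b and c: the two cells in which the observers disagree.
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("the table is empty")
    return (a + d) / n


print(observed_agreement(5, 5, 15, 75))  # 0.8
```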

To calculate the κ-statistic, the first step is to calculate the agreement that would have occurred by chance alone. Observer A finds the sign in a proportion $w_1/N$ of all patients. Observer B finds the sign in $y_1$ patients, so among those $y_1$ patients observer A would find the sign by chance in $w_1 y_1/N$ patients. This is the number of patients in whom both observers agree by chance that the sign is present. Likewise, both observers would agree by chance that the sign is absent in $w_2 y_2/N$ patients.

The expected chance agreement ($p_e$) is the sum of these two values, divided by $N$:

- $p_e = \frac{w_1 y_1 + w_2 y_2}{N^2}$
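The chance-agreement step can be sketched from the row and column totals of the table. The function name `expected_agreement` is hypothetical, and the sketch assumes observer A's results form the rows and observer B's the columns; swapping `b` and `c` gives the same value, so that orientation does not change the result.

```python
def expected_agreement(a: int, b: int, c: int, d: int) -> float:
    """Expected chance agreement p_e = (w1*y1 + w2*y2) / N**2.

    Assumes observer A's results are the rows of the 2 x 2 table
    and observer B's results are the columns.
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("the table is empty")
    w1, w2 = a + b, c + d  # observer A: positive, negative (row totals)
    y1, y2 = a + c, b + d  # observer B: positive, negative (column totals)
    return (w1 * y1 + w2 * y2) / n**2


print(expected_agreement(5, 5, 15, 75))  # 0.74
```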

If both observers find that a sign is very rare ($w_1$ and $y_1$ approaching 0) or very common ($w_1$ and $y_1$ approaching $N$), the expected chance agreement ($p_e$) approaches 100%.
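The effect of prevalence on $p_e$ can be seen with a short sketch. The function name and the counts are hypothetical: both observers are assumed to call the sign positive in the same number of patients out of $N = 100$, and that number is made progressively rarer (and, with 99, very common).

```python
def expected_agreement_from_marginals(w1: int, y1: int, n: int) -> float:
    """p_e = (w1*y1 + w2*y2) / N**2, with w2 = N - w1 and y2 = N - y1."""
    w2, y2 = n - w1, n - y1
    return (w1 * y1 + w2 * y2) / n**2


# Hypothetical: both observers find the sign in `positives` of 100 patients.
for positives in (50, 20, 5, 1, 99):
    p_e = expected_agreement_from_marginals(positives, positives, 100)
    print(positives, round(p_e, 4))
# 50 0.5
# 20 0.68
# 5 0.905
# 1 0.9802
# 99 0.9802
```

As the finding becomes very rare or very common, $p_e$ climbs towards 1, leaving little room for agreement beyond chance.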

The κ-statistic is the difference between the observed agreement and the expected chance agreement ($p_o - p_e$), divided by the maximum increment that could have been observed if the observed agreement were 100% ($1 - p_e$):

- $\kappa = \frac{p_o - p_e}{1 - p_e}$

The sample problem in the figure represents a fictional study of 100 patients examined for ischial tuberosity tenderness. Both observers agree that tenderness is present in 5 patients and absent in 75 patients.

- Observed agreement ($p_o$) is (5 + 75)/100 = 0.80.

- By chance, the observers would agree that the sign was present in (5 + 5) × (15 + 5)/100 = 2 patients.

- By chance, the observers would agree that the sign was absent in (75 + 15) × (75 + 5)/100 = 72 patients.

- Expected chance agreement ($p_e$) is (2 + 72)/100 = 0.74.

- The κ-statistic is (0.80 − 0.74)/(1 − 0.74) = 0.06/0.26 ≈ 0.23.
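The whole worked example can be reproduced with a small sketch. The class name `AgreementTable` and its methods are hypothetical. The sketch assumes observer A's results are the rows of the table and takes the two disagreement cells as b = 5 and c = 15, which is inferred from the sums (5 + 5) and (15 + 5) in the chance-agreement step rather than stated directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgreementTable:
    """2 x 2 table of two observers' findings (observer A = rows, B = columns)."""
    a: int  # both observers positive
    b: int  # A positive, B negative
    c: int  # A negative, B positive
    d: int  # both observers negative

    @property
    def n(self) -> int:
        return self.a + self.b + self.c + self.d

    def observed_agreement(self) -> float:
        return (self.a + self.d) / self.n

    def expected_agreement(self) -> float:
        w1, w2 = self.a + self.b, self.c + self.d  # observer A's totals
        y1, y2 = self.a + self.c, self.b + self.d  # observer B's totals
        return (w1 * y1 + w2 * y2) / self.n**2

    def kappa(self) -> float:
        p_o = self.observed_agreement()
        p_e = self.expected_agreement()
        if p_e == 1.0:
            raise ValueError("kappa is undefined when expected agreement is 1")
        return (p_o - p_e) / (1.0 - p_e)


# Sample problem: tenderness present per both observers in 5, absent in 75;
# discordant cells 5 and 15 inferred from the chance-agreement arithmetic.
table = AgreementTable(a=5, b=5, c=15, d=75)
print(round(table.observed_agreement(), 2))  # 0.8
print(round(table.expected_agreement(), 2))  # 0.74
print(round(table.kappa(), 2))               # 0.23
```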
