Validity

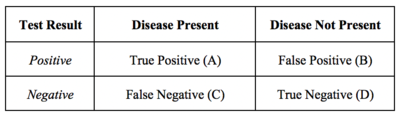

Latest revision as of 19:00, 11 October 2022

For a test to be useful it must be both reliable and valid. Validity means that the test does what it is purported to do. The validity of a test is determined by seeing how well it performs compared with a reference standard (criterion standard, gold standard). To make decisions about patient care, the clinician must also know the pre-test probability of the disease in question.

Benefits of Diagnostic Testing

The use of diagnostic tests in patient care settings must be guided by evidence. Unfortunately, many providers order tests without considering the evidence that supports them. Sensitivity and specificity are essential indicators of test accuracy and allow healthcare providers to judge whether a diagnostic tool is appropriate. Providers should place a level of confidence in test results that matches the test's known sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), positive likelihood ratio, and negative likelihood ratio.

Diagnostic test results are often presented in 2x2 tables. The values within this table are used to calculate sensitivity, specificity, predictive values, and likelihood ratios. In the formulas that follow, cell A holds the true positives, B the false positives, C the false negatives, and D the true negatives. A diagnostic test’s validity, or its ability to measure what it is intended to measure, is determined by sensitivity and specificity.
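As a minimal sketch, the 2x2 table can be held in a small data structure. The class name `TwoByTwo` and its field names are illustrative assumptions; the cell letters follow the formulas in this article (A = true positives, B = false positives, C = false negatives, D = true negatives), and the counts are those of the worked example later in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical container for the four cells of a 2x2 diagnostic table."""
    a: int  # true positives  (test positive, disease present)
    b: int  # false positives (test positive, disease absent)
    c: int  # false negatives (test negative, disease present)
    d: int  # true negatives  (test negative, disease absent)

    @property
    def test_positive(self) -> int:
        return self.a + self.b

    @property
    def test_negative(self) -> int:
        return self.c + self.d

    @property
    def diseased(self) -> int:
        return self.a + self.c

    @property
    def not_diseased(self) -> int:
        return self.b + self.d

    @property
    def total(self) -> int:
        return self.a + self.b + self.c + self.d


table = TwoByTwo(a=369, b=58, c=15, d=558)
print(table.test_positive, table.test_negative, table.diseased, table.total)
```

The row totals (test positive, test negative) and column totals (diseased, not diseased) are derived rather than stored, so they cannot drift out of step with the four cells.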

Sensitivity

Sensitivity is the proportion of true positive tests out of all patients with a condition. In other words, it is the ability of a test or instrument to yield a positive result for a subject who has the disease. The equation for sensitivity is the following:

[math]\displaystyle{ \text{Sensitivity}=\frac{\text{True Positives (A)}}{\text{True Positives (A)}+\text{False Negatives (C)}} }[/math]

Sensitivity says nothing about individuals who tested positive but did not have the disease. False positives are accounted for by specificity and PPV.
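The sensitivity formula can be sketched in a few lines. The function name and the zero-denominator check are assumptions added for illustration; the counts are taken from the worked example later in this article.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Proportion of people with the disease who test positive: A / (A + C)."""
    diseased = true_pos + false_neg
    if diseased == 0:
        raise ValueError("sensitivity is undefined when nobody has the disease")
    return true_pos / diseased


example_sensitivity = sensitivity(true_pos=369, false_neg=15)
print(round(example_sensitivity, 3))  # 0.961
```

Note that the false-positive count never enters the calculation, which is why sensitivity alone cannot describe people who test positive without having the disease.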

Specificity

Specificity is the proportion of true negatives out of all subjects who do not have a disease or condition. In other words, it is the ability of the test or instrument to return a normal-range or negative result for a person who does not have the disease. The formula for specificity is the following:

[math]\displaystyle{ \text{Specificity}=\frac{\text{True Negatives (D)}}{\text{True Negatives (D)}+\text{False Positives (B)}} }[/math]

Sensitivity and specificity are inversely related: as sensitivity increases, specificity tends to decrease, and vice versa. Highly sensitive tests yield positive findings for most patients with the disease, whereas highly specific tests yield negative findings for most patients without the disease. Sensitivity and specificity should always be considered together to give a complete picture of a diagnostic test. Next, it is important to understand PPVs and NPVs.
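The trade-off between sensitivity and specificity can be illustrated with a test that reports a numeric score and calls a result positive at or above a cut-off. The scores below are invented purely for illustration and do not come from the article or from any real test.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    return true_neg / (true_neg + false_pos)


def rates_at_cutoff(diseased_scores, healthy_scores, cutoff):
    """Classify score >= cutoff as positive; return (sensitivity, specificity)."""
    tp = sum(score >= cutoff for score in diseased_scores)
    fn = len(diseased_scores) - tp
    fp = sum(score >= cutoff for score in healthy_scores)
    tn = len(healthy_scores) - fp
    return sensitivity(tp, fn), specificity(tn, fp)


# Hypothetical scores, made up for this sketch.
DISEASED_SCORES = [4, 6, 7, 8, 9]
HEALTHY_SCORES = [1, 2, 3, 5, 6]

for cutoff in (3, 6, 8):
    sens, spec = rates_at_cutoff(DISEASED_SCORES, HEALTHY_SCORES, cutoff)
    print(f"cutoff={cutoff}: sensitivity={sens:.1f}, specificity={spec:.1f}")
# cutoff=3: sensitivity=1.0, specificity=0.4
# cutoff=6: sensitivity=0.8, specificity=0.8
# cutoff=8: sensitivity=0.4, specificity=1.0
```

Raising the cut-off removes false positives (higher specificity) at the cost of missing more true cases (lower sensitivity), which is the pattern described above.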

PPV and NPV

PPV is the proportion of all positive findings that are true positives; NPV is the proportion of all negative findings that are true negatives. As each value approaches 100%, the test approaches the performance of a ‘gold standard.’ The formulas for PPV and NPV are below.

[math]\displaystyle{ \text{Positive Predictive Value}=\frac{\text{True Positives (A)}}{\text{True Positives (A)}+\text{False Positives (B)}} }[/math]

[math]\displaystyle{ \text{Negative Predictive Value}=\frac{\text{True Negatives (D)}}{\text{True Negatives (D)}+\text{False Negatives (C)}} }[/math]

Disease prevalence in a population affects PPV and NPV. When a disease is highly prevalent, PPV rises and NPV falls, so the test is better at ‘ruling in’ the disease and worse at ‘ruling it out.’ Providers should therefore take prevalence into account when they examine their own diagnostic test metrics or interpret values reported by other providers or researchers. When reviewing research that presents these values, providers should consider the study sample and understand that the values in their own population may differ. Taken together, problems in the diagnostic test outputs (e.g., very low specificity) may lead clinicians to reconsider the clinical acceptability of a test and to consider alternative diagnostic methods.
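The effect of prevalence can be made concrete by writing PPV and NPV in terms of sensitivity, specificity, and prevalence, which is an application of Bayes' theorem. The function names are assumptions for this sketch; the sensitivity and specificity come from the worked example later in this article, and the 1% prevalence is a hypothetical comparison value.

```python
def ppv(sens: float, spec: float, prevalence: float) -> float:
    """P(disease | positive test), from Bayes' theorem."""
    true_pos = sens * prevalence
    false_pos = (1 - spec) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sens: float, spec: float, prevalence: float) -> float:
    """P(no disease | negative test), from Bayes' theorem."""
    true_neg = spec * (1 - prevalence)
    false_neg = (1 - sens) * prevalence
    return true_neg / (true_neg + false_neg)


SENS = 369 / 384   # sensitivity from the article's example
SPEC = 558 / 616   # specificity from the article's example

for prevalence in (0.01, 0.384):  # 0.01 is hypothetical; 0.384 is the example's
    print(
        f"prevalence={prevalence:.3f}: "
        f"PPV={ppv(SENS, SPEC, prevalence):.3f}, "
        f"NPV={npv(SENS, SPEC, prevalence):.3f}"
    )
```

With the same test, the lower prevalence gives a much lower PPV and a higher NPV, so predictive values reported in one population should not be carried over unchanged to another.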

Likelihood Ratios

Sensitivity and specificity alone do not tell a clinician how much a given result changes the probability of disease, and predictive values have one significant drawback: they are not constant properties of a test, but are critically dependent on the prevalence of the condition in the population being studied. To overcome this, likelihood ratios are used.

Likelihood ratios (LRs) are another statistical tool for understanding diagnostic tests. LRs allow providers to determine how much the result of a particular test will alter the probability of disease. A positive likelihood ratio, or LR+, is the “probability that a positive test would be expected in a patient [with a disease] divided by the probability that a positive test would be expected in a patient without a disease.” In other words, LR+ is the true positive rate divided by the false positive rate. A negative likelihood ratio, or LR-, is “the probability of a patient testing negative who has a disease divided by the probability of a patient testing negative who does not have a disease.” Unlike predictive values, and like sensitivity and specificity, likelihood ratios are not affected by disease prevalence. The formulas for the likelihood ratios are below.

[math]\displaystyle{ \text{Positive Likelihood Ratio}=\frac{\text{Sensitivity}}{1-\text{Specificity}} }[/math]

[math]\displaystyle{ \text{Negative Likelihood Ratio}=\frac{1-\text{Sensitivity}}{\text{Specificity}} }[/math]
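One common way to apply a likelihood ratio is to convert the pre-test probability to odds, multiply by the LR, and convert back to a probability. The sketch below assumes that approach; the function names are illustrative, and the inputs are taken from the worked example later in this article.

```python
def positive_lr(sens: float, spec: float) -> float:
    """LR+ = sensitivity / (1 - specificity); undefined when specificity is 1."""
    return sens / (1 - spec)


def negative_lr(sens: float, spec: float) -> float:
    """LR- = (1 - sensitivity) / specificity; undefined when specificity is 0."""
    return (1 - sens) / spec


def post_test_probability(pre_test_probability: float, likelihood_ratio: float) -> float:
    """Convert probability to odds, apply the LR, convert back to probability."""
    pre_test_odds = pre_test_probability / (1 - pre_test_probability)
    post_test_odds = pre_test_odds * likelihood_ratio
    return post_test_odds / (1 + post_test_odds)


SENS = 369 / 384
SPEC = 558 / 616
PRE_TEST = 384 / 1000  # prevalence in the example, used as pre-test probability

after_positive = post_test_probability(PRE_TEST, positive_lr(SENS, SPEC))
after_negative = post_test_probability(PRE_TEST, negative_lr(SENS, SPEC))
print(round(after_positive, 3), round(after_negative, 3))
```

When the pre-test probability equals the prevalence in the study sample, the post-test probability after a positive result equals the PPV, and after a negative result it equals 1 - NPV. The benefit of the LR is that the same calculation works for any other pre-test probability.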

Now that these topics have been covered, the following exercise applies them by calculating sensitivity, specificity, predictive values, and likelihood ratios.

Example

A healthcare provider uses a blood test to determine whether patients have a disease.

The results are the following:

- A total of 1,000 individuals had their blood tested.

- Four hundred twenty-seven individuals had positive findings, and 573 individuals had negative findings.

- Out of the 427 individuals who had positive findings, 369 of them had the disease.

- Out of the 573 individuals who had negative findings, 558 did not have the disease.

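Let’s calculate the sensitivity, specificity, PPV, NPV, LR+, and LR-, starting with a 2x2 table. The information above gives the row totals and two of the cells directly. The remaining values were not provided, but they follow by subtraction: 427 - 369 = 58 false positives (B), 573 - 558 = 15 false negatives (C), and column totals of 369 + 15 = 384 and 58 + 558 = 616.

| | Disease present | Disease absent | Total |
|---|---|---|---|
| Test positive | 369 (A) | 58 (B) | 427 |
| Test negative | 15 (C) | 558 (D) | 573 |
| Total | 384 | 616 | 1,000 |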

The provider found that a total of 384 individuals actually had the disease, but how accurate was the blood test?
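The cells that were not stated directly can be recovered by subtraction from the totals. The following sketch uses only the counts given in the example; the variable names are illustrative.

```python
# Counts stated in the example.
total_tested = 1000
test_positive = 427
test_negative = 573
true_positives = 369   # cell A: positive test, disease present
true_negatives = 558   # cell D: negative test, disease absent

# Cells derived from the row totals.
false_positives = test_positive - true_positives   # cell B
false_negatives = test_negative - true_negatives   # cell C

# Column totals.
diseased = true_positives + false_negatives
not_diseased = false_positives + true_negatives

# Sanity checks: rows and columns must both add up to the number tested.
assert test_positive + test_negative == total_tested
assert diseased + not_diseased == total_tested

print(false_positives, false_negatives, diseased, not_diseased)  # 58 15 384 616
```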

Results:

Sensitivity

- Sensitivity=(True Positives (A))/(True Positives (A)+False Negatives (C))

- Sensitivity=(369 (A))/(369(A)+15 (C))

- Sensitivity=369/384

- Sensitivity=0.961

Specificity

- Specificity=(True Negatives (D))/(True Negatives (D)+False Positives (B))

- Specificity=(558 (D))/(558(D)+58 (B))

- Specificity=558/616

- Specificity=0.906

Positive Predictive Value

- PPV =(True Positives (A))/(True Positives (A)+False Positives (B))

- PPV =(369 (A))/(369 (A)+58(B))

- PPV =369/427

- PPV =0.864

Negative Predictive Value

- NPV=(True Negatives (D))/(True Negatives (D)+False Negatives(C))

- NPV=(558(D))/(558 (D)+15(C))

- NPV=558/573

- NPV=0.974

Positive Likelihood Ratio

- Positive Likelihood Ratio=Sensitivity/(1-Specificity)

- Positive Likelihood Ratio=0.961/(1-0.906)

- Positive Likelihood Ratio=0.961/0.094

- Positive Likelihood Ratio=10.22

Negative Likelihood Ratio

- Negative Likelihood Ratio=(1- Sensitivity)/Specificity

- Negative Likelihood Ratio=(1- 0.961)/0.906

- Negative Likelihood Ratio=0.039/0.906

- Negative Likelihood Ratio=0.043

The results show a sensitivity of 96.1%, specificity of 90.6%, PPV of 86.4%, NPV of 97.4%, LR+ of 10.22, and LR- of 0.043.
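The six results can be checked with a short script. This is a sketch rather than a reference implementation: the function name `diagnostic_metrics` and the dictionary keys are assumptions, and the inputs are the four cell counts from the example.

```python
def diagnostic_metrics(a: int, b: int, c: int, d: int) -> dict:
    """Compute the six metrics from the 2x2 cells.

    a = true positives, b = false positives,
    c = false negatives, d = true negatives.
    """
    sens = a / (a + c)
    spec = d / (d + b)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": a / (a + b),
        "npv": d / (d + c),
        "lr_pos": sens / (1 - spec),
        "lr_neg": (1 - sens) / spec,
    }


metrics = diagnostic_metrics(a=369, b=58, c=15, d=558)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Computed from unrounded sensitivity and specificity, LR+ comes out at about 10.21 rather than 10.22. The small difference arises because the hand calculation above divides the rounded values 0.961 and 0.094; the other five figures agree to the precision shown.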

Clinical Significance

Although other techniques for analysing diagnostic test data exist (e.g., receiver operating characteristic curves), the topics in this article are essential starting points for healthcare providers. Diagnostic testing is a crucial component of evidence-based patient care. When deciding whether to use a diagnostic test, providers should consider the benefits and risks of the test as well as its diagnostic accuracy. With a foundational understanding of how to interpret sensitivity, specificity, predictive values, and likelihood ratios, healthcare providers can understand the outputs of current and new diagnostic assessments, which aids decision-making and ultimately improves healthcare for patients.

Resources

- On Validity - Bogduk 2022.pdf

References

Part or all of this article or section is derived from Diagnostic Testing Accuracy: Sensitivity, Specificity, Predictive Values and Likelihood Ratios by Jacob Shreffler and Martin R. Huecker, used under CC BY 4.0.