Power

{{partial}}

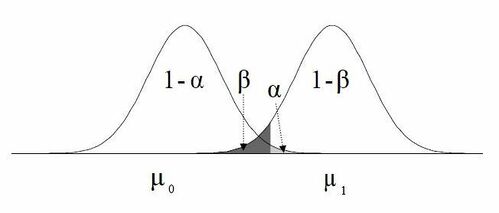

[[File:Statistical Power.jpg|thumb|499x499px|'''Figure 1.''' Statistical power is the probability of rejecting a false null hypothesis (1 - β). μ0 is the mean under the null hypothesis and μ1 is the mean under the alternative hypothesis. α is the type I error rate (the significance level). β is the type II error rate.]]

In an experiment, two samples are compared, each of which is meant to represent a population. Errors can occur that cause problems when generalising the results to the wider population. The fable of the Boy Who Cried Wolf illustrates both kinds of error. First, the villagers make a type I error by believing there is a wolf when there is not. Later, they commit a type II error by believing there is no wolf when there is one.

== Null Hypothesis and Type I Errors ==

When comparing cholesterol measurements from a sample of employed people with those from a sample of unemployed people, we test the hypothesis that the two samples came from the same population of cholesterol measurements. The '''null hypothesis''' is the hypothesis that there is no difference in cholesterol measurements between the two groups.

The phrase 'no difference' is defined by limits within which we regard the samples as not differing significantly. If the limits are set at twice the standard error and the observed difference in means falls outside this range, then either an unusual event has happened or the null hypothesis is incorrect. Here, 'unusual event' means that, if the null hypothesis were actually true, we would be wrong approximately 1 time in 20.
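As a rough check on the '1 in 20' figure, the following Python sketch computes how often a difference would fall more than k standard errors from zero purely by chance. It assumes that, when the null hypothesis is true, the difference in means is approximately normally distributed; the function name `two_sided_tail_probability` is illustrative, and only the standard library's `statistics.NormalDist` is used.

```python
from statistics import NormalDist


def two_sided_tail_probability(k: float) -> float:
    """Chance, under the null hypothesis, of a difference beyond +/- k standard errors."""
    standard_normal = NormalDist(mu=0.0, sigma=1.0)
    return 2.0 * (1.0 - standard_normal.cdf(k))


# Limits at exactly twice the standard error.
print(round(two_sided_tail_probability(2.0), 4))   # prints 0.0455 (about 1 in 22)
# The conventional limit of 1.96 standard errors corresponds to 1 in 20.
print(round(two_sided_tail_probability(1.96), 4))  # prints 0.05
# Going the other way: the limit that gives exactly alpha = 0.05.
print(round(NormalDist().inv_cdf(1 - 0.05 / 2), 2))  # prints 1.96
```

Under this assumption, 'twice the standard error' is a convenient rounding of 1.96 standard errors, which is the limit that fixes α at 0.05.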

Rejecting the null hypothesis when it is actually true is called a '''type I error'''. The type I error rate, denoted by the symbol α, is the level at which a result is declared significant. In other words, if the difference is greater than the limit set by α, the result is regarded as significant and the null hypothesis is rejected as unlikely. If the difference is within this limit, the result is regarded as non-significant and the null hypothesis is not rejected, which is not the same as showing that it is true.

''A type I error is when a difference is found but it is not a real difference (false positive).''
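To make 'wrong about 1 time in 20' concrete, the sketch below simulates many studies in which the null hypothesis is true (both groups drawn from the same population) and counts how often a two-sided z-test rejects it at α = 0.05. It is an illustration under stated assumptions, not a recommended analysis: measurements are normally distributed, the population standard deviation is known, and the population values (mean 5.0, SD 1.0, 30 per group) are arbitrary rather than taken from any cholesterol data. The function names are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def z_test_rejects(sample_a, sample_b, sigma, alpha=0.05):
    """Two-sided two-sample z-test, assuming the population SD (sigma) is known."""
    se_diff = sigma * (1 / len(sample_a) + 1 / len(sample_b)) ** 0.5
    z = (fmean(sample_a) - fmean(sample_b)) / se_diff
    critical_value = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return abs(z) > critical_value


def simulated_type_one_error_rate(n_studies=4000, n_per_group=30, mu=5.0, sigma=1.0, seed=1):
    """Fraction of simulated studies that reject a null hypothesis which is actually true."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_studies):
        # Both groups come from the same population, so every rejection is a type I error.
        group_a = [rng.gauss(mu, sigma) for _ in range(n_per_group)]
        group_b = [rng.gauss(mu, sigma) for _ in range(n_per_group)]
        if z_test_rejects(group_a, group_b, sigma):
            false_positives += 1
    return false_positives / n_studies


print(simulated_type_one_error_rate())  # should be close to alpha = 0.05
```

The simulated rate hovers around α because α is, by construction, the probability of a false positive when the null hypothesis holds.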

== Alternative Hypothesis and Type II Errors ==

When we compare two groups, a non-significant result does not prove that the two samples came from the same population; it only means we have failed to show that they do ''not''. The study hypothesis, otherwise known as the '''alternative hypothesis''', is the difference between the two groups that we would like to demonstrate in a study, typically a difference that would be clinically worthwhile.

If we do not reject the null hypothesis but there actually is a difference between the two groups, we have made a '''type II error'''. The probability of a type II error is often denoted by the symbol β.

''A type II error is when a real difference exists but is not detected in the experiment (false negative).''
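A complementary simulation shows type II errors. In the sketch below the alternative hypothesis is true: one group's mean is shifted by a hypothetical half a standard deviation, with 20 participants per group. The same simplifying assumptions apply (normal data, known SD, two-sided z-test at α = 0.05), and the function names are illustrative. Each study that fails to reject the null hypothesis is a false negative, so the returned fraction estimates β.

```python
import random
from statistics import NormalDist, fmean


def z_test_rejects(sample_a, sample_b, sigma, alpha=0.05):
    """Two-sided two-sample z-test, assuming the population SD (sigma) is known."""
    se_diff = sigma * (1 / len(sample_a) + 1 / len(sample_b)) ** 0.5
    z = (fmean(sample_a) - fmean(sample_b)) / se_diff
    return abs(z) > NormalDist().inv_cdf(1 - alpha / 2)


def simulated_type_two_error_rate(true_difference=0.5, n_per_group=20, sigma=1.0,
                                  n_studies=4000, seed=2):
    """Fraction of simulated studies that miss a difference which really exists (beta)."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(n_studies):
        # The alternative hypothesis is true: group B's mean is shifted by true_difference.
        group_a = [rng.gauss(0.0, sigma) for _ in range(n_per_group)]
        group_b = [rng.gauss(true_difference, sigma) for _ in range(n_per_group)]
        if not z_test_rejects(group_a, group_b, sigma):
            misses += 1  # a false negative
    return misses / n_studies


beta = simulated_type_two_error_rate()
print(round(beta, 2))  # close to the theoretical value of about 0.65
```

Under these assumptions most such studies miss a real difference, which is what a high β looks like in practice.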

== Power ==

Statistical power is closely related to type II error. Power is the probability of correctly rejecting a null hypothesis that is false. Unfortunately, many studies lack sufficient power, and their non-significant findings should be presented as inconclusive.

The power of a study is the complement of β, i.e. 1 - β: the probability of rejecting the null hypothesis when it is false.
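Under the same simplifying assumptions (normal data, a known common standard deviation, equal group sizes, a two-sided z-test), power can be computed directly instead of simulated. The function below is a minimal sketch of that normal-approximation formula; its name and the example inputs are hypothetical.

```python
from statistics import NormalDist


def power_two_sample_z(delta, sigma, n_per_group, alpha=0.05):
    """Power (1 - beta) of a two-sided two-sample z-test with equal group sizes.

    delta: true difference in means; sigma: common population SD (assumed known).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    se_diff = sigma * (2 / n_per_group) ** 0.5
    shift = delta / se_diff  # true difference expressed in standard errors
    # H0 is rejected when the test statistic lands beyond either critical value.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


power = power_two_sample_z(delta=0.5, sigma=1.0, n_per_group=64)
beta = 1 - power
print(f"power = {power:.2f}, beta = {beta:.2f}")  # power = 0.81, beta = 0.19
```

Setting `delta` to 0 returns α itself: when there is no true difference, the only rejections are type I errors.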

The power of a statistical test depends on the level of significance set by the researcher, the sample size, and the effect size, i.e. the extent to which the groups differ because of the treatment. Statistical power is critical for healthcare providers deciding how many patients to enrol in clinical studies. Power is strongly associated with sample size; when the sample size is large, power is generally not an issue. Conversely, researchers conducting a study with a small sample, and therefore low power, should be aware of the increased likelihood of a type II error. The greater the N within a study, the more likely it is that a researcher will reject the null hypothesis. The concern is that a very large sample can yield a statistically significant result for a difference too small to matter in practice; relying on p-values alone in large samples can therefore be misleading.
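Both effects of sample size can be sketched with the same normal-approximation power function (assumptions as before: normal data, known SD of 1, equal groups, two-sided α = 0.05; all inputs are hypothetical). The first part holds a medium effect fixed and increases N; the second shows that with very large samples even a trivial difference is almost certain to be declared significant.

```python
from statistics import NormalDist


def power_two_sample_z(delta, sigma, n_per_group, alpha=0.05):
    """Normal-approximation power of a two-sided two-sample z-test (equal group sizes)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = delta / (sigma * (2 / n_per_group) ** 0.5)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Same hypothetical effect (half a standard deviation) at increasing sample sizes.
sample_sizes = [10, 25, 50, 100, 200]
powers = [power_two_sample_z(0.5, 1.0, n) for n in sample_sizes]
for n, p in zip(sample_sizes, powers):
    print(f"n per group = {n:>3}: power = {p:.2f}")

# A trivially small difference (0.05 SD) is very likely to be "significant"
# once each group has 20,000 participants.
print(f"{power_two_sample_z(0.05, 1.0, 20_000):.3f}")
```

The second result is why statistical significance in a very large study says little on its own about whether a difference is clinically important.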

It is essential to recognize that power can be adequate with a smaller sample ''if'' the effect size is large. What is an acceptable level of power? Many researchers agree that a power of 80% or higher is adequate. Studies with lower power will find fewer true effects than studies with higher power, so clinicians should be aware that a non-significant result from an underpowered study may be a type II error. Unfortunately, many researchers, and providers who assess medical literature, do not scrutinize power analyses. Studies with low power may also hinder future work: because they often fail to detect real effects, potentially useful interventions may remain undiscovered or be wrongly labelled as ineffective.
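The interplay between effect size and the 80% threshold can be sketched for a fixed, fairly small study. Here the effect size is assumed to be expressed in standard-deviation units (difference in means divided by the SD), and the group size of 30 and the three effect sizes are hypothetical; the normal-approximation assumptions from the earlier sketches still apply.

```python
from statistics import NormalDist


def power_for_effect_size(effect_size, n_per_group, alpha=0.05):
    """Normal-approximation power; effect_size is the difference in means divided by the SD."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size / (2 / n_per_group) ** 0.5
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


TARGET_POWER = 0.80  # the commonly cited minimum
n_per_group = 30     # a hypothetical, fairly small study

for effect_size in (0.2, 0.5, 0.8):
    power = power_for_effect_size(effect_size, n_per_group)
    verdict = "adequate" if power >= TARGET_POWER else "underpowered"
    print(f"effect size {effect_size}: power = {power:.2f} ({verdict})")
```

Under these assumptions only the largest effect reaches 80% power with 30 participants per group, illustrating how the same sample can be adequate for one question and inadequate for another.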

Medical researchers should invest time in conducting power analyses so that their studies can detect a meaningful difference or association. Many tables of power values, as well as statistical software packages, are available to help determine study power and guide study design and analysis. To calculate power with statistical software, the following inputs are needed: the predetermined alpha level, the proposed sample size, and the effect size the investigator(s) aim to detect. By performing power calculations at the design stage, researchers can determine the sample size needed to detect the target effect and, once the study is complete, judge whether it actually had sufficient power.
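The same quantities can be rearranged to answer the design question directly: given α, the desired power and the effect size to detect, how many participants are needed per group? The sketch below uses the textbook normal-approximation formula for two equal groups, with the effect size assumed to be in standard-deviation units; it is not the algorithm of any particular software package, and calculators based on the t-test usually return slightly larger numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Participants needed per group for a two-sided two-sample comparison.

    Normal-approximation formula: n = 2 * (z_{1-alpha/2} + z_{power})**2 / effect_size**2,
    where effect_size is the target difference in means divided by the common SD.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return math.ceil(n)  # round up: a fraction of a participant is not possible


for effect_size in (0.2, 0.5, 0.8):
    print(effect_size, sample_size_per_group(effect_size))
```

Under these assumptions, detecting a difference of half a standard deviation with 80% power at α = 0.05 needs 63 participants per group, a large effect (0.8) needs 25, and a small effect (0.2) needs 393.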

== Example ==

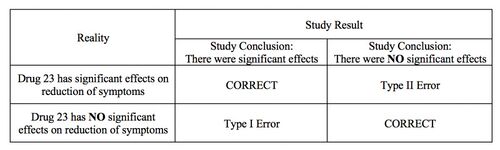

[[File:Type I and type II statistical errors.jpg|thumb|right|500px|'''Figure 2.''' Type I and Type II Errors and Statistical Power.<br><small>Contributed by Martin Huecker, MD and Jacob Shreffler, PhD</small>]]

A type I error occurs in research when we reject the null hypothesis and erroneously state that the study found significant differences when in fact there was no difference. In other words, it is equivalent to saying that the groups or variables differ when, in fact, they do not: a false positive. An example of a research hypothesis is below:

Drug 23 will ''significantly reduce symptoms associated with Disease A'' compared to Drug 22.

In our example, if we were to state that Drug 23 significantly reduced symptoms of Disease A compared to Drug 22 when it did not, this would be a type I error. Committing a type I error can be very serious in certain scenarios. For example, if we moved ahead with Drug 23 on the basis of our research findings even though there was actually no difference between the groups, and the drug cost patients significantly more or had more side effects, then we would raise healthcare costs, cause iatrogenic harm, and fail to improve clinical outcomes. When a p-value is used to guard against type I error, the null hypothesis is rejected only if the p-value is below α, which keeps the long-run type I error rate at α; the lower the p-value, the less likely the observed result would be if the null hypothesis were true.
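A minimal sketch of that decision rule, assuming a test statistic that is approximately standard normal under the null hypothesis (for example, a large-sample z-test). The z values are hypothetical and do not represent real results for Drug 23 or Drug 22; `two_sided_p_value` is an illustrative name.

```python
from statistics import NormalDist


def two_sided_p_value(z):
    """Probability of a z statistic at least this extreme if the null hypothesis is true."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


ALPHA = 0.05
# Hypothetical test statistics comparing Drug 23 with Drug 22 (not real study results).
for z in (1.0, 2.0, 3.0):
    p = two_sided_p_value(z)
    decision = "reject H0" if p < ALPHA else "do not reject H0"
    print(f"z = {z}: p = {p:.4f} -> {decision}")
```

Because the p-value is computed assuming the null hypothesis is true, rejecting only when p < α means that, across many studies where Drug 23 truly offers no benefit, about α of them would still (wrongly) declare it superior.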

A type II error occurs when we declare no differences or associations between study groups when, in fact, there are. Like type I errors, type II errors can cause problems in certain scenarios. Picture a new, less invasive surgical technique that is developed and tested against the more invasive standard of care. Researchers would seek to show no difference in health outcomes between patients receiving the two treatments (a noninferiority study). If, however, the less invasive procedure actually led to less favourable health outcomes and the study failed to detect this, the resulting type II error would be severe. Figure 2 depicts type I and type II errors.

== See Also ==

*[[Hypothesis Testing, P Values, Confidence Intervals, and Significance]]
*[[Statistical Significance]]


Latest revision as of 21:11, 4 March 2022


==References==

Part or all of this article or section is derived from ''Type I and Type II Errors and Statistical Power'' by Jacob Shreffler and Martin R. Huecker, used under CC BY 4.0.